Getting started

How to configure a data pipe

Datazoom provides an easy way to initiate and manage data collection. Data pipes are used to specify which data to collect and to which destinations to send the data. The steps below illustrate the simple process to create a data pipe by adding collectors and connectors.

Contact Datazoom to learn more!

Overview

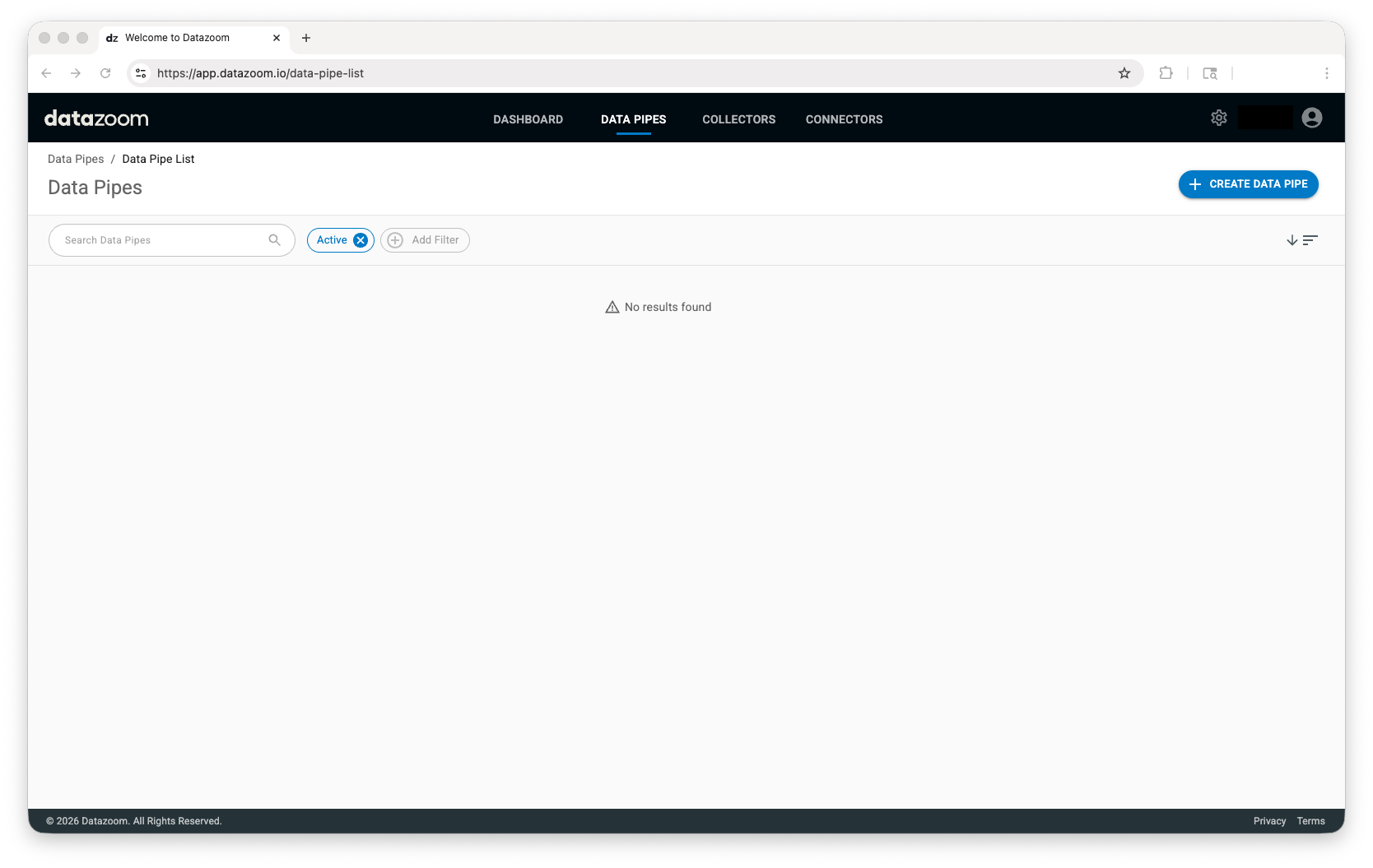

Navigate to the “DATA PIPES” tab

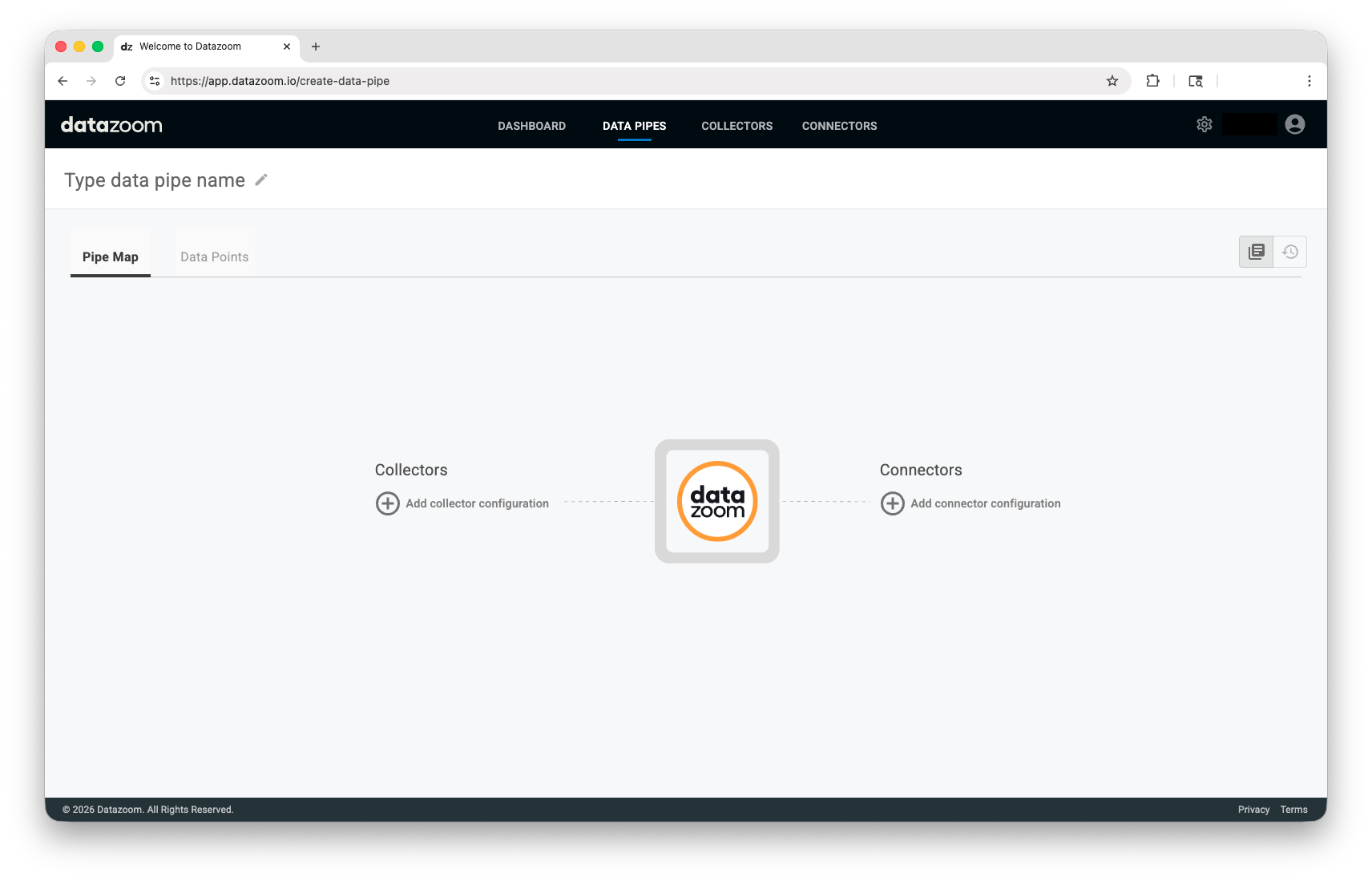

Click the “CREATE DATA PIPE” button to begin adding a Data Pipe.

Enter a unique name for this Data Pipe

On the following screen, the “Type data pipe name” input appears in the upper left.

Select a Collector

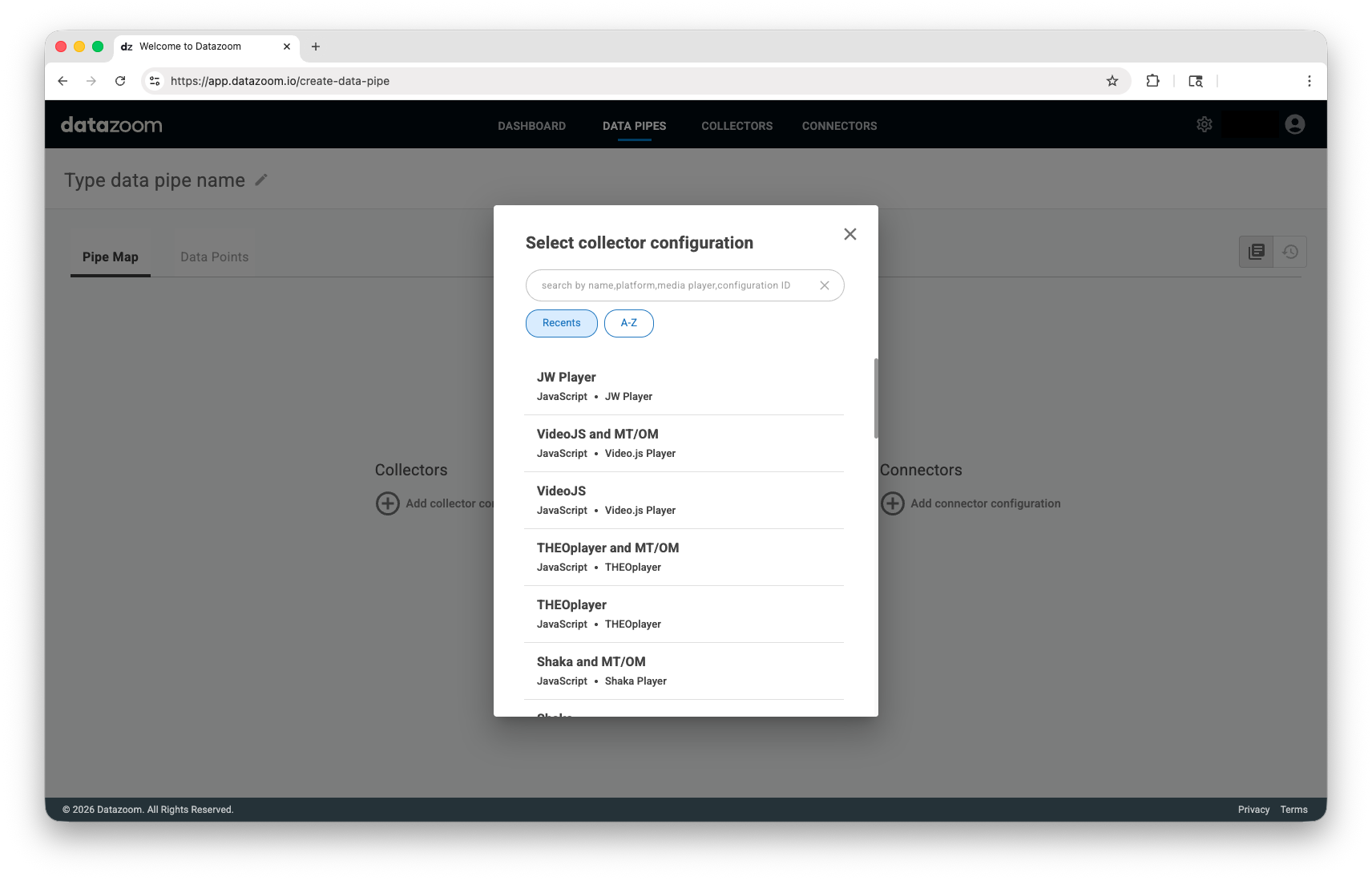

Click the + icon under the “Collectors” heading to bring up a list of your configured collectors. Click the one that you wish to add to this Data Pipe.

Select a Connector

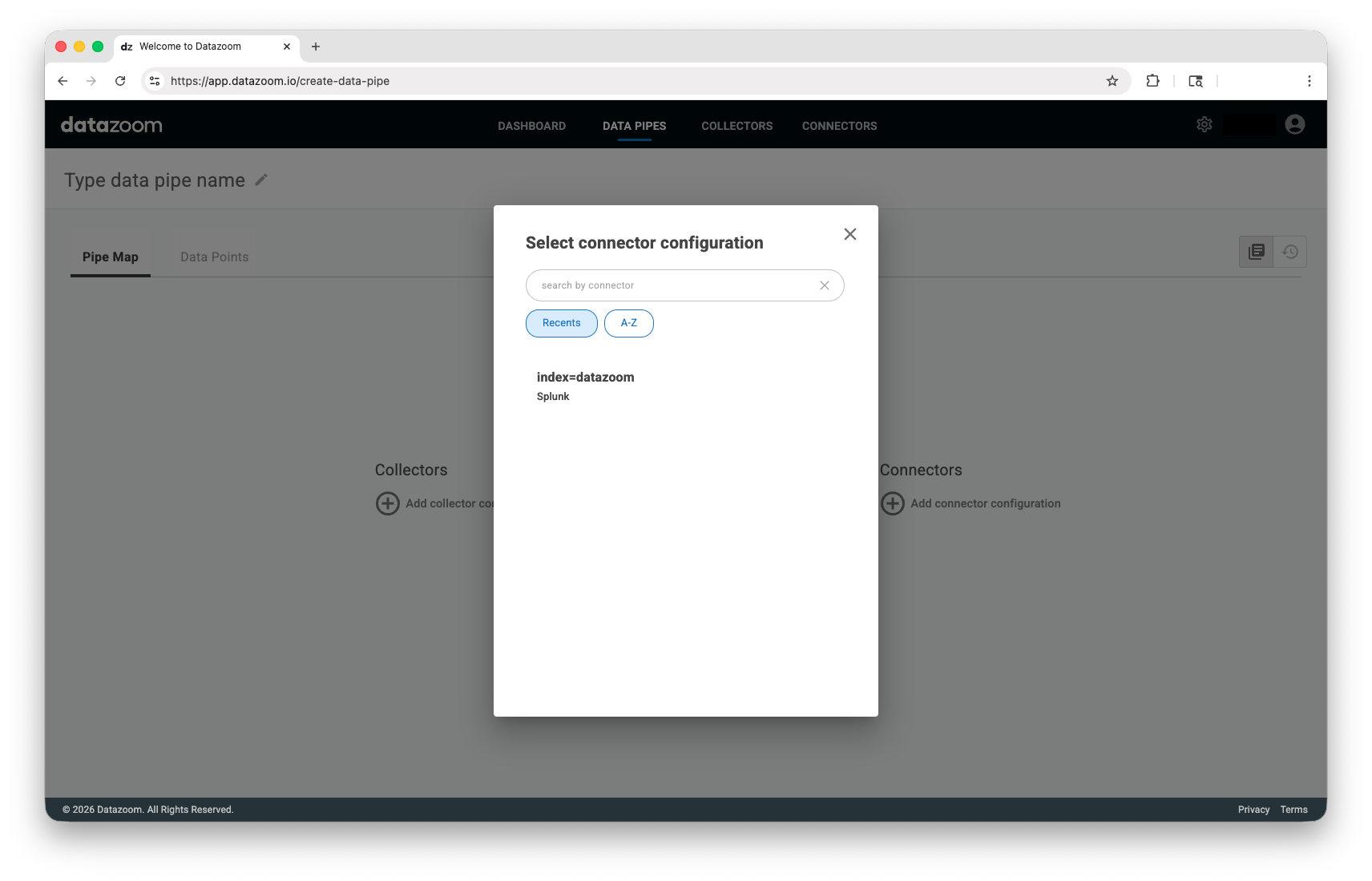

Click the + icon under the “Connectors” heading to bring up a list of your configured connectors. Select the one that you wish to add to this Data Pipe.

Select data points

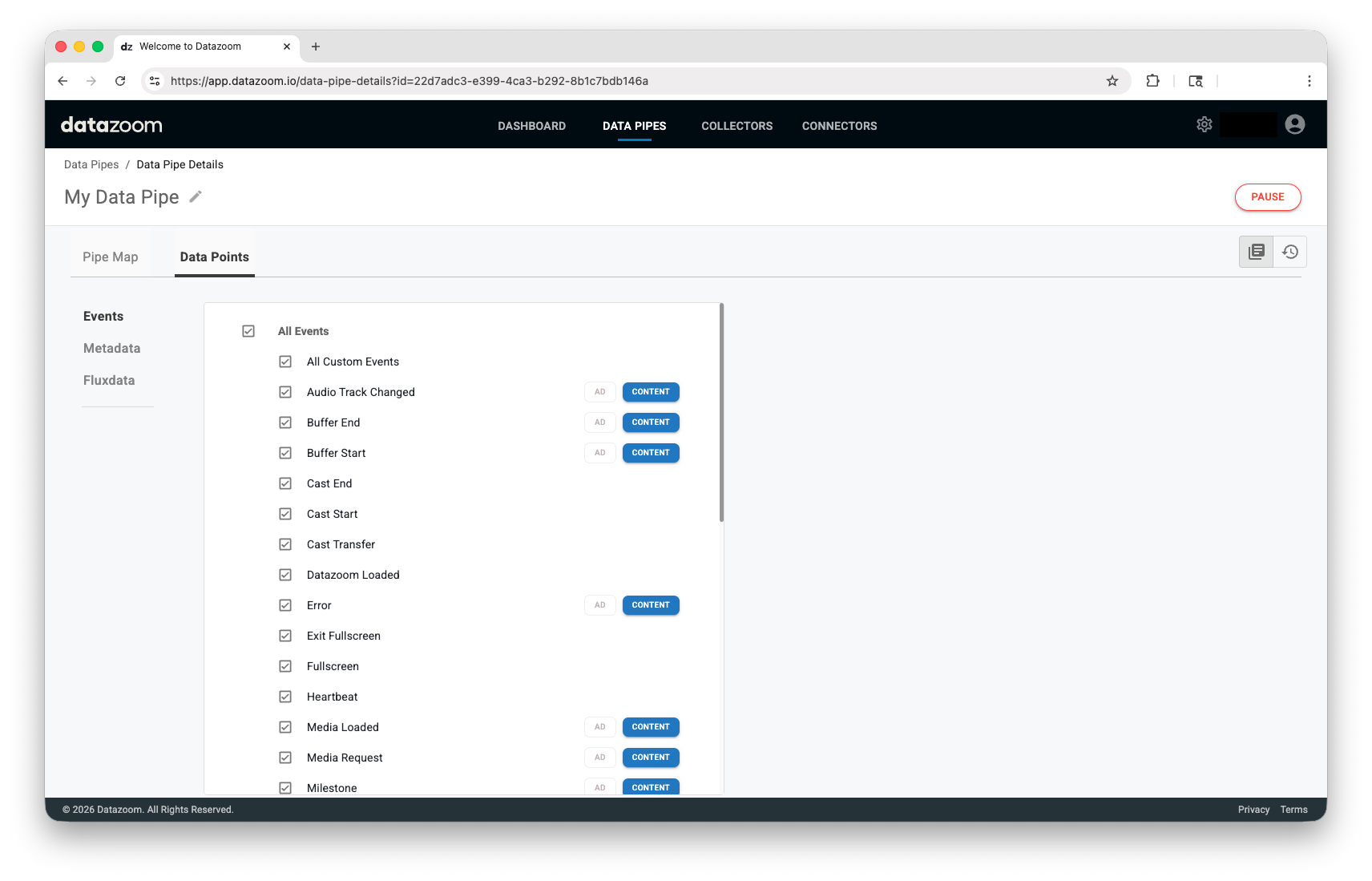

Click the Data Points tab to manage the data points that you wish to collect in this Data Pipe.

We're done!

Once you save your Data Pipe, you can continue adding Collectors and Connectors that share the same event collection properties to create a multi-dimensional data collection pipeline.

Data Pipe Specific Data Point Selection

For any given event, the only metadata and fluxdata collected are the data points selected in the specific data pipe(s) where that event is selected for collection. This lets you create highly specific data collection strategies from a single collector configuration, significantly reducing data volume without sacrificing valuable insights.
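
The selection rule can be pictured with a small Python sketch. This is only a mental model, not Datazoom's configuration format or API: the `PIPES` structure, the pipe names, the `route_event` helper, and the `device_type` and `country` fields are all hypothetical, and the sketch assumes each pipe filters the payload independently.

```python
from typing import Any

# Hypothetical pipe definitions for illustration only; this is not
# Datazoom's configuration format. Each pipe lists the events it collects
# and the data points selected for it.
PIPES: dict[str, dict[str, set[str]]] = {
    "session_pipe": {
        "events": {"custom_session_start"},
        "data_points": {"app_session_id", "device_type", "country"},
    },
    "interaction_pipe": {
        "events": {"custom_selectMenu", "custom_viewCarousel"},
        "data_points": {"app_session_id", "custom_menuItemLabel"},
    },
}


def route_event(event_type: str, payload: dict[str, Any]) -> dict[str, dict[str, Any]]:
    """Return, for each pipe that selects this event, the payload reduced
    to that pipe's selected data points."""
    routed: dict[str, dict[str, Any]] = {}
    for name, pipe in PIPES.items():
        if event_type in pipe["events"]:
            routed[name] = {
                key: value
                for key, value in payload.items()
                if key in pipe["data_points"]
            }
    return routed


# The collector sees the full payload; each pipe keeps only what it selected.
payload = {
    "app_session_id": "s1",
    "device_type": "tv",
    "country": "US",
    "custom_menuItemLabel": "Settings",
}

print(route_event("custom_selectMenu", payload))
print(route_event("unlisted_event", payload))
```

In this sketch the menu event keeps only `app_session_id` and `custom_menuItemLabel`, and an event that no pipe selects is not collected at all.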

By configuring different data pipes to handle different event payloads, you can send a large, context-setting event once and then follow it with many smaller, minimal events that reference the original context:

How It Works: A Practical Example

Data Pipe 1: Initial Session Event

Event: A single, initial event like custom_session_start.

Data: This pipe is configured to collect the full, comprehensive metadata payload. This includes all session-wide context: user details, device information, geo-location, network data, and custom metadata. This event establishes the app_session_id.

Data Pipe 2: Initial Page View Event

Event: This pipe would include events that establish a new, non-video context, such as custom_DOM_pageView.

Data: The payload will include metadata specific to the page-level context (URL, title, etc.) and the app_session_id to link it to the main session. It will exclude the redundant global context data sent in Data Pipe 1.

Data Pipe 3: Subsequent Page Events

Events: This pipe should contain the numerous user interaction events that can happen on a page (e.g., custom_selectMenu, custom_viewCarousel, etc.).

Data: The payload will be minimal, containing only the app_session_id and any metadata unique to that specific action (e.g., custom_menuItemLabel, ongoing session metrics).

Data Pipe 4: Initial Video & Ad Events

Events: Context-setting events like playback_start and ad_impression.

Data: This pipe collects metadata specific to the content or ad being played (e.g., asset_id, ad_creative_id) along with ongoing session metrics. It also includes the app_session_id to link back to the main session but excludes the redundant session metadata already sent in Pipes 1 & 2. These events also send an identifier (e.g., content_session_id, ad_id) that subsequent playback events can reference.

Data Pipe 5: Subsequent Playback Events

Events: Frequent, in-playback events like heartbeat, pause, rendition_change, and milestone.

Data: This pipe is configured to be extremely minimal. It collects only the necessary session identifiers (app_session_id, content_session_id, ad_id). All other static metadata is excluded except for ongoing session metrics and any metadata unique to the specific action.


By structuring your data pipes this way, you filter incoming payloads so that each outgoing event carries only the bare essentials, which saves on egress. In post-processing, you can easily join the lightweight events back to their heavy payload parent events using the session IDs, creating a complete and detailed record of the user journey for analysis.
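
As a rough illustration of that post-processing join, the Python sketch below rebuilds full records from lightweight events. It assumes the events have already landed in your destination as plain dictionaries. The sample records, the `enrich` helper, and the `device_type`, `country`, and `playhead_position` fields are hypothetical; only the event names and session identifiers come from the example above.

```python
# Hypothetical events as they might arrive from the five pipes.
events = [
    {"event": "custom_session_start", "app_session_id": "s1",
     "device_type": "tv", "country": "US"},
    {"event": "playback_start", "app_session_id": "s1",
     "content_session_id": "c1", "asset_id": "a42"},
    {"event": "heartbeat", "app_session_id": "s1",
     "content_session_id": "c1", "playhead_position": 30},
]


def enrich(events):
    """Join each event to its context-setting parents via session IDs."""
    session_context = {}  # app_session_id -> session-wide metadata
    content_context = {}  # content_session_id -> content metadata

    # First pass: index the heavy, context-setting events by their ID.
    for e in events:
        fields = {k: v for k, v in e.items() if k != "event"}
        if e["event"] == "custom_session_start":
            session_context[e["app_session_id"]] = fields
        elif e["event"] == "playback_start":
            content_context[e["content_session_id"]] = fields

    # Second pass: merge parent context into every event. The event's own
    # fields are applied last so they win over inherited values.
    enriched = []
    for e in events:
        row = {}
        row.update(session_context.get(e.get("app_session_id"), {}))
        row.update(content_context.get(e.get("content_session_id"), {}))
        row.update(e)
        enriched.append(row)
    return enriched


for row in enrich(events):
    print(row)
```

Here the heartbeat record regains `device_type`, `country`, and `asset_id` even though it was sent with only its identifiers and `playhead_position`. The same join could be expressed in SQL or a dataframe library; plain dictionaries are used here only to keep the sketch dependency-free.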